Compute Express Link (CXL)

Compute Express Link Guide and Resources

Compute Express Link (CXL) technology is evolving rapidly to address the memory allocation and data storage challenges created by artificial intelligence and machine learning applications. Advanced analysis solutions from VIAVI help operators meet the unique test and validation requirements of the CXL standard.

Products

-

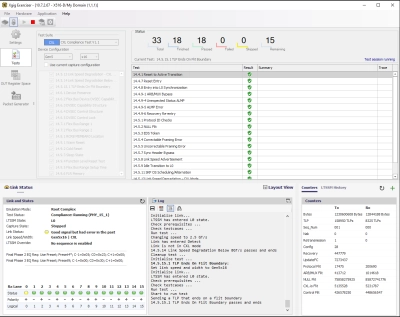

Xgig 6P16 Analyzer / Exerciser for PCI Express 6.0

A fully integrated, 16-lane PCIe 6.0 analyzer/exerciser solution with support for CXL and NVMe

Xgig 5P16 Analyzer / Exerciser / Jammer Platform for PCI Express 5.0

Combines analyzer, exerciser, and jammer (A/E/J) functionality on a single platform, with support for the latest PCIe, NVMe and CXL specifications, and...

Xgig Exerciser for CXL v1.1/v2.0

Approved for CXL Gold Suite compliance testing, the CXL Exerciser generates CXL data streams and responses for...

As a participating member of the CXL Consortium, VIAVI has recognized the growing industry consensus around Compute Express Link. Decades of high-speed interconnect testing experience uniquely qualify VIAVI to lead vital CXL decode validation efforts and to take part in ongoing specification development from a customer-centric perspective.

An industry-leading portfolio of PCIe® protocol analyzers, jammers, exercisers, and test software spanning multiple generations of the standard has allowed VIAVI to move naturally into CXL testing, since CXL runs over the same physical interface. Real-time metrics, transaction analytics, and detailed capture and analysis of CXL bring-ups are now available in versatile and intuitive test platforms.

Support at every step

We provide technical support, services, comprehensive training, and the resources you need. It is all part of what we do to maximize the value of your investment in VIAVI.

Ask an expert

Contact us for more information or to request a quote. Our experts can give you the right answers to any questions you may have.