Hyperscale

Testing challenges, resources, and solutions

Hyperscale ecosystems and VIAVI

VIAVI Solutions actively participates in more than thirty standards bodies and open-source initiatives, including the Telecom Infra Project (TIP). However, when standards do not move fast enough, we get ahead of them and develop equipment to test evolving infrastructure standards. We believe in open APIs so that hyperscalers can continue to write their own automation code.

The complexity of hyperscale architecture makes testing essential during network build-out, expansion, and monitoring. Decades of innovation, partnership, and collaboration with more than 4,000 customers worldwide and with standards bodies such as the FOA (Fiber Optic Association) have uniquely qualified VIAVI to address the distinctive test and assurance challenges that hyperscale operators face. We ensure the performance of optical hardware throughout the data center ecosystem lifecycle, from the lab through turn-up to monitoring.

What does “hyperscale” mean?

“Hyperscale” refers to the hardware and software architecture used to build highly adaptable computing systems from a large number of networked servers. The IDC definition sets a minimum threshold of 5,000 servers in 10,000 square feet (929 square meters) or more of floor space.
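The IDC threshold above comes down to two minimums and a unit conversion. The short Python sketch below checks both and confirms the square-meter figure. The function and constant names are illustrative only.

```python
SQ_FT_TO_M2 = 0.09290304  # 1 ft = 0.3048 m exactly, so 1 ft^2 = 0.09290304 m^2

# Minimums from the IDC definition quoted above.
MIN_SERVERS = 5_000
MIN_AREA_SQ_FT = 10_000


def sq_ft_to_m2(area_sq_ft: float) -> float:
    """Convert square feet to square meters."""
    return area_sq_ft * SQ_FT_TO_M2


def meets_idc_threshold(servers: int, area_sq_ft: float) -> bool:
    """True only if both the server-count and floor-area minimums are met."""
    return servers >= MIN_SERVERS and area_sq_ft >= MIN_AREA_SQ_FT


print(round(sq_ft_to_m2(MIN_AREA_SQ_FT)))  # 929
print(meets_idc_threshold(6_000, 12_000))  # True
print(meets_idc_threshold(4_000, 20_000))  # False
```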

Horizontal scaling (scaling out) responds quickly to demand by deploying or activating more servers. Vertical scaling (scaling up) instead increases the power, speed, and bandwidth of existing hardware. The high capacity that hyperscale technology provides makes it ideal for cloud computing and big data applications. Software-defined networking (SDN) is another essential ingredient, along with specialized load balancing that directs traffic between servers and clients.
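To make the load-balancing and scale-out ideas above concrete, here is a minimal Python sketch of a least-utilization balancer. It assumes each server reports a fixed request capacity and a count of active requests. The class and method names are hypothetical and are not taken from any load balancer product. Scaling out corresponds to `add_server`, and scaling up would correspond to raising an existing server's `capacity`.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    capacity: int      # max concurrent requests (hypothetical unit)
    active: int = 0    # requests currently assigned

    @property
    def utilization(self) -> float:
        return self.active / self.capacity


@dataclass
class LeastLoadedBalancer:
    servers: list[Server] = field(default_factory=list)

    def add_server(self, server: Server) -> None:
        """Scale out: make one more server available to the pool."""
        self.servers.append(server)

    def route(self) -> Server:
        """Assign a new request to the least-utilized server that still has room."""
        candidates = [s for s in self.servers if s.active < s.capacity]
        if not candidates:
            raise RuntimeError("pool saturated: scale out or scale up")
        target = min(candidates, key=lambda s: s.utilization)
        target.active += 1
        return target

    def release(self, server: Server) -> None:
        """Mark one of the server's requests as finished."""
        server.active = max(0, server.active - 1)


lb = LeastLoadedBalancer([Server("a", capacity=2), Server("b", capacity=4)])
print([lb.route().name for _ in range(6)])  # ['a', 'b', 'b', 'a', 'b', 'b']
lb.add_server(Server("c", capacity=4))       # scale out
print(lb.route().name)                       # c
```

With capacities of 2 and 4, six requests fill both servers in proportion to their capacity. A seventh request would fail until a server is added or an existing one is scaled up.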

Hyperscale resources

- How do hyperscale solutions work?

Hyperscale solutions optimize hardware efficiency with massive server arrays and computing processes designed for flexibility and scalability. The load balancer continuously monitors the workload and capacity of each server so that new requests are routed appropriately. Artificial intelligence (AI) can be applied to hyperscale storage, load balancing, and fiber-optic transmission to optimize performance and to power down idle or underused equipment.

- Advantages of hyperscale solutions

The sheer size of hyperscale architecture brings advantages such as efficient power and cooling distribution, balanced workloads across servers, and built-in redundancy. These attributes also make it easier to store, locate, and back up large amounts of data. For customer enterprises, using the services of hyperscale providers can be more cost-effective than buying and maintaining their own servers, and it carries a lower risk of downtime. It can also significantly reduce the time demands on in-house IT staff.

- Challenges of hyperscale solutions

The economies of scale that hyperscale technology delivers can also create challenges. High traffic volumes and complex flows can make real-time data monitoring difficult. Visibility into external traffic can also suffer because of the speed and sheer number of fibers and connections. Security concerns grow because concentrating data magnifies the consequences of any breach. Automation and machine learning (ML) are two of the tools hyperscale providers use to improve observability and security.

Hyperscale models

The ability to precisely match memory, storage, and compute allocation to changing service requirements distinguishes hyperscale solutions from traditional data center models. Virtual machine (VM) technology lets software applications move quickly from one physical location to another. High-density servers, parallel nodes for improved redundancy, and continuous monitoring are other common features. Because no single server configuration or architecture is ideal, new models are being developed to meet the needs of customers, service providers, and emerging operators.
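Matching compute and memory to service requirements is, at its core, a placement problem. The sketch below uses first-fit decreasing, a classic bin-packing heuristic, to place VMs on hosts by vCPU and memory. It is an illustrative stand-in, not the scheduler any hyperscale provider actually runs. The VM and host figures are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    free_vcpus: int
    free_mem_gb: int
    vms: list[str] = field(default_factory=list)


def place_vms(vms: dict[str, tuple[int, int]], hosts: list[Host]) -> dict[str, str]:
    """First-fit decreasing placement.

    vms maps a VM name to (vCPUs, memory in GB). VMs are sorted largest first
    (by memory, then vCPUs) and each goes on the first host with enough room.
    Returns a mapping of VM name -> host name.
    """
    placement: dict[str, str] = {}
    by_size = sorted(vms.items(), key=lambda kv: (kv[1][1], kv[1][0]), reverse=True)
    for vm, (vcpus, mem_gb) in by_size:
        for host in hosts:
            if host.free_vcpus >= vcpus and host.free_mem_gb >= mem_gb:
                host.free_vcpus -= vcpus
                host.free_mem_gb -= mem_gb
                host.vms.append(vm)
                placement[vm] = host.name
                break
        else:  # no host had room: in practice, scale out or migrate VMs
            raise RuntimeError(f"no host can fit {vm}")
    return placement


hosts = [Host("h1", free_vcpus=16, free_mem_gb=64), Host("h2", free_vcpus=16, free_mem_gb=64)]
demand = {"web1": (4, 8), "db": (8, 48), "cache": (4, 32), "web2": (4, 8)}
print(place_vms(demand, hosts))
# {'db': 'h1', 'cache': 'h2', 'web1': 'h1', 'web2': 'h1'}
```

Placing the largest VMs first leaves the small ones to fill the gaps. This is why `web1` and `web2` fit on `h1` after `cache` has been pushed to `h2`.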

- What is the “hyperscale cloud”?

The internet and cloud computing have reshaped how users access applications and data storage. By moving these functions from on-premises servers to remote data centers run by large cloud service providers (CSPs), enterprises can provision infrastructure on demand without disrupting internal operations. Hyperscale cloud service providers add a massive layer of data center capacity and elasticity to traditional cloud offerings such as software as a service (SaaS), infrastructure as a service (IaaS), and data analytics.

- Hyperscale vs. cloud computing

Although the terms are sometimes used interchangeably, cloud deployments do not always reach such enormous proportions, and hyperscale data centers can support much more than cloud computing. Public clouds that deliver resources to multiple customers over the internet (cloud as a service) usually fall under “hyperscale”, whereas private cloud deployments can be relatively small. When an enterprise data center, built and operated by an organization to meet its own computing and storage needs, incorporates a large number of servers and advanced orchestration capabilities, the result is known as enterprise hyperscale.

- Hyperscale cloud service providers

Hyperscale cloud service providers invest heavily in infrastructure and intellectual property to meet customer requirements. Interoperability becomes critical as customers demand portability across standardized platforms. Synergy Research Group data shows that the largest players, Amazon Web Services (AWS), Microsoft, and Google, account for more than half of all hyperscale data centers. These three providers are also leading the planning and development of the hyperscale edge data centers needed to meet the evolving requirements of 5G and the Internet of Things (IoT).

- Hyperscale colocation

Colocation lets a company rent part of an existing data center instead of building a new one. Hyperscale colocation can create useful synergies when related sectors, such as retailers and wholesale suppliers, share the same roof. Each tenant's overall power, cooling, and communication costs go down, while uptime, security, and scalability clearly improve. Hyperscale companies can also increase their efficiency and profitability by renting excess capacity to tenants.

- Hyperscale architecture

Although there is no established definition of hyperscale architecture, its common characteristics include petabyte-scale (or larger) storage capacity, a discrete software layer that performs complex data transfer and load-balancing functions, built-in redundancy, and commodity server hardware. Unlike the servers in small private data centers, hyperscale servers typically use wider racks and are designed for easier reconfiguration. Together, these characteristics effectively remove intelligence from the hardware and rely instead on automation and advanced software to manage data storage and scale applications quickly.

- Hyperscale vs. hyperconverged

Hyperscale architecture separates compute from storage and networking. Hyperconverged architecture, by contrast, integrates these components into prepackaged modular solutions. Because the software-defined elements are built in a virtual environment, the three components of a hyperconverged block cannot be separated. Hyperconverged architecture makes it easy to scale up or down quickly through a single point of contact. Additional benefits include combined compression, encryption, and redundancy functions.

Hyperscale connectivity

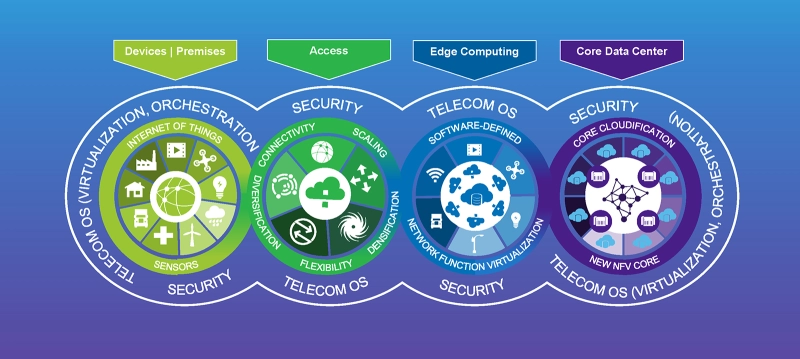

As hyperscale models become more distributed, edge computing and multi-cloud strategies are increasingly common. Connectivity must be assured both for the physical connections inside the data center and for the high-speed fiber-optic links that seamlessly tie hyperscale sites together.

- Data center interconnects (DCIs) link massive data centers to each other and to intelligent edge computing centers around the world.

- DCI connection tests simplify installation by quickly verifying throughput and pinpointing sources of latency and other problems.

- PAM4 modulation and forward error correction (FEC) add complexity to ultra-fast 400G/800G Ethernet DCI links.
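PAM4 uses four amplitude levels, so it carries twice as many bits per symbol as two-level (NRZ) signaling. The Python sketch below assumes a Gray-coded bit-to-level table, in which adjacent levels differ by one bit. Gray coding is the usual choice for PAM4, but treat this exact table as an illustrative assumption. The sketch also computes a raw lane rate from an example symbol rate of 53.125 GBd. That raw rate includes FEC and line-coding overhead, which is why it exceeds the Ethernet payload rate.

```python
# Gray-coded PAM4: neighbouring amplitude levels differ in exactly one bit,
# so the most likely symbol error (to an adjacent level) corrupts only one bit.
GRAY_PAM4 = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def pam4_encode(bits: list[int]) -> list[int]:
    """Map an even-length bit sequence to PAM4 levels 0..3, two bits per symbol."""
    if len(bits) % 2:
        raise ValueError("PAM4 carries 2 bits per symbol; bit count must be even")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def raw_lane_rate_bps(symbol_rate_baud: float, bits_per_symbol: int = 2) -> float:
    """Raw lane rate = symbol rate x bits per symbol (includes FEC/coding overhead)."""
    return symbol_rate_baud * bits_per_symbol


print(pam4_encode([0, 0, 0, 1, 1, 1, 1, 0]))  # [0, 1, 2, 3]
print(raw_lane_rate_bps(53.125e9) / 1e9)       # 106.25 (example symbol rate)
```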

Automated MPO connector inspection that completes in seconds, simultaneous testing of multiple 400G or 800G ports, and virtual service activation and monitoring are among the test capabilities needed to keep pace with evolving hyperscale interconnect architecture.

Hyperscale test practices

Many hyperscale test practices for fiber connections, network performance, and quality of service remain consistent with conventional data center testing, only at a significantly larger scale. Reliable uptime matters even more as test complexity increases. DCIs running near full capacity must be tested and monitored systematically to verify performance and catch potential problems before a failure occurs. Automated monitoring solutions should be used to minimize the demand on resources.
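One simple way to automate catching problems before a failure on a DCI that runs near full capacity is to smooth utilization samples and raise an alert when the smoothed value crosses a headroom threshold. The sketch below uses an exponentially weighted moving average (EWMA). This is a basic statistical technique, not machine learning. The smoothing factor and the 90% threshold are assumptions to tune per link.

```python
def ewma(samples: list[float], alpha: float) -> list[float]:
    """Exponentially weighted moving average; alpha in (0, 1], higher = less smoothing."""
    smoothed: list[float] = []
    for x in samples:
        smoothed.append(x if not smoothed else alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


def headroom_alerts(utilization: list[float], alpha: float = 0.5,
                    threshold: float = 0.9) -> list[int]:
    """Indices where smoothed link utilization (0.0-1.0) rises to or above threshold.

    Only upward crossings are reported, so a link that stays hot raises one alert.
    """
    alerts: list[int] = []
    was_above = False
    for i, level in enumerate(ewma(utilization, alpha)):
        is_above = level >= threshold
        if is_above and not was_above:
            alerts.append(i)
        was_above = is_above
    return alerts


print(headroom_alerts([0.5, 1.0, 0.5]))  # [] (a single spike is smoothed away)
print(headroom_alerts([0.5, 0.6, 1.0, 1.0, 1.0, 0.5, 0.5, 1.0, 1.0]))  # [4, 8]
```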

Because data center hardware and software are heavily customized, interoperability is essential for best-in-class test solutions. This includes test tools that support open APIs to accommodate hyperscale diversity. Common interfaces such as PCIe and MPO, which enable high density and capacity, are now more widespread and require solutions that can test and manage them efficiently.

- Bit error rate testers (BERTs) such as the MAP-2100 were developed specifically for environments where little or no staff is available to test networks.

- Network monitoring solutions designed for the hyperscale ecosystem can flexibly run large-scale performance monitoring tests from a variety of physical or virtual access points.

- Test best practices defined for elements such as MPO connectors and ribbon fiber can be applied at massive scale across deployments.
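BER testing raises a practical question: how long must a link run error-free before you can claim a target BER? A common rule uses the zero-error Poisson bound, N = -ln(1 - CL) / BER, for the number of bits N that must pass at confidence level CL. The sketch below applies that bound. The 95% confidence level and the line rates are example values.

```python
import math


def bits_for_ber_claim(ber_target: float, confidence: float = 0.95) -> float:
    """Bits that must pass with zero errors to claim BER <= ber_target.

    Zero-error Poisson bound: N = -ln(1 - CL) / BER.
    """
    return -math.log(1.0 - confidence) / ber_target


def required_test_seconds(ber_target: float, line_rate_bps: float,
                          confidence: float = 0.95) -> float:
    """Error-free test duration needed at a given line rate."""
    return bits_for_ber_claim(ber_target, confidence) / line_rate_bps


def measured_ber(errors: int, bits_sent: float) -> float:
    """Observed BER of a completed test."""
    return errors / bits_sent


for rate_gbps in (100, 400, 800):
    t = required_test_seconds(1e-12, rate_gbps * 1e9)
    print(f"{rate_gbps}G: {t:.1f} s error-free for BER <= 1e-12 at 95% confidence")
```

Higher line rates shorten the required test time proportionally. This is one reason automated BER testing scales well at 400G and 800G.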

Hyperscale solutions

New data center facilities keep growing in complexity, density, and size. Test solutions and products originally developed for other high-capacity fiber applications can also be used to verify and maintain hyperscale performance.

- Fiber inspection: The high volume of fiber connections within and between data centers requires reliable, efficient fiber inspection tools. A single contaminated end face, a defect, or even one particle can cause insertion loss and put network performance at risk. The best fiber inspection tools for hyperscale applications offer compact form factors, automated inspection routines, and support for multifiber connectors.

- Fiber monitoring: The growth of fiber in hyperscale data centers has made fiber monitoring a difficult but essential task. Versatile tools for continuity testing, optical power measurement, and OTDR testing are paramount in every phase of construction, activation, and troubleshooting. Automated fiber monitoring systems such as the ONMSi Remote Fiber Test System (RFTS) can provide scalable, centralized fiber monitoring with immediate alerts.

- MPO connectors: MPO (Multi-Fiber Push On) connectors were once used mainly for dense trunk cables. Today, hyperscale density constraints have driven rapid adoption of MPO connectors for switch, server, and patch panel connections. Dedicated MPO test solutions with automated pass/fail results can speed up fiber testing and inspection.

- 400G and 800G high-speed transport: Emerging technologies with heavy bandwidth demands, such as IoT and 5G networks, have made cutting-edge high-speed Ethernet standards such as 400G and 800G essential for hyperscale solutions. Scalable, automated test tools can run advanced error-rate and throughput tests to verify performance. Industry-standard ITU-T Y.1564 and RFC 2544 service activation workflows also evaluate latency, jitter, and frame loss at high speeds.

- Virtual testing: Portable test instruments that once required a network technician on site can now be operated virtually through the Fusion test agent, which reduces resource requirements. The software-based Fusion platform can be used to monitor networks and verify SLAs. Ethernet activation tasks such as RFC 6349 TCP throughput tests can also be launched and run virtually.

- 5G networks: Distributed, disaggregated 5G networks increase demand for hyperscale solutions and for virtual testing of network functions, applications, and security. TeraVM is a valuable tool for validating both physical and virtual network functions and for emulating millions of unique application flows to assess overall quality of experience (QoE). Learn more about our comprehensive end-to-end RANtoCore™ test and validation solutions.

- Observability: Observability means gaining deeper insight into the network, which leads to higher performance and reliability. The VIAVI Observer platform draws on rich network data sources to produce flexible, intuitive dashboards and actionable insights. The result is faster problem resolution, better scalability, and optimized service delivery. Learn more about performance management and security.
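The service-activation metrics named in the 400G/800G and virtual-testing bullets above reduce to a few formulas. The sketch below computes frame loss ratio, average delay, and peak-to-peak delay variation. The peak-to-peak figure is a simplified definition, and Y.1564 test sets report their own frame delay variation metrics. The sketch also computes the bandwidth-delay product that RFC 6349 uses to size TCP windows, along with the window-limited TCP throughput bound. All input numbers are illustrative.

```python
from statistics import mean


def frame_metrics(frames_sent: int, delays_ms: list[float]) -> dict[str, float]:
    """Summarize one test stream.

    delays_ms holds the one-way delay of each frame that arrived.
    Delay variation here is simply max - min (peak-to-peak), a simplified
    stand-in for the frame delay variation a Y.1564 test set reports.
    """
    received = len(delays_ms)
    return {
        "frame_loss_ratio": round((frames_sent - received) / frames_sent, 6),
        "avg_delay_ms": round(mean(delays_ms), 6),
        "delay_variation_ms": round(max(delays_ms) - min(delays_ms), 6),
    }


def bdp_bytes(bottleneck_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product (RFC 6349): bytes in flight needed to fill the path."""
    return bottleneck_bps * rtt_s / 8


def window_limited_throughput_bps(window_bytes: float, rtt_s: float) -> float:
    """Upper bound on one TCP connection's throughput: window / RTT."""
    return window_bytes * 8 / rtt_s


print(frame_metrics(5, [1.0, 1.2, 0.8, 1.0]))
# A 10 Gb/s path with 1 ms RTT needs about 1.25 MB in flight...
print(bdp_bytes(10e9, 1e-3))                               # 1250000.0
# ...so a 65,535-byte (unscaled) window caps a single flow far below line rate.
print(window_limited_throughput_bps(65_535, 1e-3) / 1e6)  # ~524 Mb/s
```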

What we offer

VIAVI's diverse portfolio of test products covers every aspect and phase of building, activating, and maintaining hyperscale solutions. Throughout the transition to edge computing and to larger, denser data centers, fiber connector inspection has remained an essential part of the overall test strategy.

- Certification: The rapidly expanding use of MPO connectors makes FiberChek Sidewinder an ideal solution for automated certification of multifiber end faces. Optical loss test sets (OLTS) designed specifically for the MPO interface, such as SmartClass Fiber MPOLx, also make Tier 1 fiber certification easier and more reliable.

- High-speed testing: Optical transport network (OTN) testing and Ethernet service activation must be fast and accurate to support the high-speed connectivity of hyperscale solutions:

- The MTS-5800 100G is a rugged, compact, industry-leading dual-port 100G test instrument for fiber testing, service activation, and troubleshooting. This versatile tool can be used for metro and core network applications as well as for DCI testing.

- The MTS-5800 supports consistent operations through repeatable methods and procedures.

- The versatile, cloud-enabled OneAdvisor-1000 takes high-speed testing to the next level with full rate and protocol coverage, native PAM4 connectivity, and service activation testing for 400G as well as legacy technologies.

- Multifiber, all-in-one solution: Hyperscale architecture creates an ideal environment for automated OTDR testing over MPO connections. The multifiber MPO switch module is an all-in-one solution for high-density fiber environments dominated by MPO connectors. When used with the MTS test platform, it lets fibers be characterized by OTDR without time-consuming fan-out or patch cables. Automated certification test workflows can run on up to 12 fibers at a time.
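OTDR distance comes from the round-trip time of backscattered or reflected light. The sketch below converts round-trip time to distance. The group index of 1.468 is an assumed typical value for single-mode fiber; replace it with the value from the cable datasheet. The loop over positions 1 to 12 mirrors the 12-fiber MPO case described above, using hypothetical timings.

```python
C_VACUUM_M_PER_S = 299_792_458.0  # speed of light in vacuum (exact, SI)


def otdr_distance_m(round_trip_s: float, group_index: float = 1.468) -> float:
    """Distance to an event from the round-trip time of the returned light.

    Light travels at c / n in the fiber and covers the distance twice
    (out and back), hence the factor of 2. group_index is an assumed
    typical single-mode value; take the real one from the cable datasheet.
    """
    return C_VACUUM_M_PER_S * round_trip_s / (2 * group_index)


print(round(otdr_distance_m(10e-6)))  # 1021 (meters for a 10 microsecond round trip)

# Hypothetical end-of-fiber round-trip times for positions 1-12 of an MPO trunk.
round_trips_s = {pos: 10e-6 + pos * 1e-9 for pos in range(1, 13)}
lengths = {pos: round(otdr_distance_m(t), 1) for pos, t in round_trips_s.items()}
print(lengths)
```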

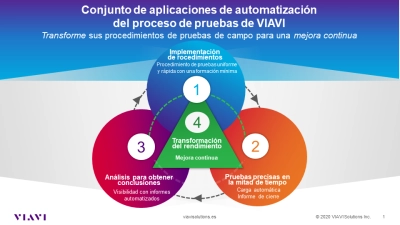

Automated testing: Test process automation (TPA) shortens hyperscale build times and reduces manual test processes and training hours. Automation enables efficient BER and throughput testing between hyperscale data centers, as well as end-to-end verification of highly complex 5G network segments.

- The SmartClass Fiber MPOLx optical loss test set brings TPA to Tier 1 fiber certification with native MPO connectivity, automated workflows, and full visibility of both ends of the link. It delivers complete test results for 12 fibers in less than 6 seconds.

- The Optimeter handheld optical fiber meter removes any reason to skip testing, because it completes fully automated, one-touch fiber link certification in less than a minute.
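Tier 1 certification compares each fiber's measured insertion loss with a loss budget. The sketch below builds a budget from per-connector, per-splice, and per-kilometer allowances and grades each fiber of a 12-fiber MPO link. The default allowances follow commonly cited TIA-568 limits: 0.75 dB per mated connector pair, 0.3 dB per splice, and 3.5 dB/km for multimode fiber at 850 nm. Treat them as assumptions here and use your project's specification. The readings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossBudget:
    # Assumed TIA-568-style allowances; replace with your project's specification.
    per_connector_pair_db: float = 0.75
    per_splice_db: float = 0.3
    fiber_db_per_km: float = 3.5  # multimode at 850 nm

    def max_loss_db(self, length_m: float, connector_pairs: int, splices: int = 0) -> float:
        """Worst-case allowed insertion loss for one fiber of the link."""
        return (connector_pairs * self.per_connector_pair_db
                + splices * self.per_splice_db
                + length_m / 1000 * self.fiber_db_per_km)


def certify_fibers(measured_db: list[float], length_m: float, connector_pairs: int,
                   budget: LossBudget = LossBudget()) -> list[tuple[int, float, str]]:
    """Grade each fiber position (1-based) as PASS or FAIL against the budget."""
    limit = budget.max_loss_db(length_m, connector_pairs)
    return [(pos, loss, "PASS" if loss <= limit else "FAIL")
            for pos, loss in enumerate(measured_db, start=1)]


# Hypothetical readings for a 12-fiber MPO link: 100 m, two mated connector pairs.
readings = [0.62, 0.58, 0.71, 0.66, 0.60, 0.64, 0.69, 0.57, 0.63, 2.10, 0.61, 0.65]
results = certify_fibers(readings, length_m=100, connector_pairs=2)
print([pos for pos, _, verdict in results if verdict == "FAIL"])  # [10]
```

Here the budget is 1.85 dB (1.5 dB for two connector pairs plus 0.35 dB for 100 m of fiber), so only fiber 10 fails.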

- Standalone and remote fiber monitoring: Advanced remote test solutions are well suited to automated, scalable hyperscale cloud environments. Fiber monitoring quickly turns detected events, including fiber degradation and intrusions, into alerts, which protects SLAs and DCI uptime. The ONMSi Remote Fiber Test System (RFTS) performs continuous OTDR sweeps to accurately detect and predict fiber degradation across the network. As a result, operating expenses, mean time to repair, and downtime in hyperscale data center networks drop sharply.

- Observability and validation: The same advances in machine learning (ML), artificial intelligence (AI), and network functions virtualization (NFV) that enable cloud-based hyperscale solutions and 5G edge computing are also driving advanced hyperscale test solutions.

- The Observer platform goes beyond traditional monitoring. It intelligently turns enriched flow data and traffic conversation details into real-time health assessments and valuable end-user experience scores.

- The flexible, software-based TeraVM system is ideal for validating virtualized network functions. Key network segments, including access, mobile backhaul, and security, can be fully validated in the lab, the data center, or the cloud.
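Remote fiber monitoring of the kind described above for the ONMSi RFTS is commonly built on comparing each new OTDR sweep with a stored reference trace. The sketch below shows that idea on event tables that list the distance and loss of each event. It is not VIAVI's algorithm, and the matching tolerance and alert thresholds are hypothetical values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    distance_m: float
    loss_db: float


def compare_to_reference(reference: list[Event], current: list[Event],
                         loss_delta_db: float = 0.5, new_event_db: float = 0.3,
                         match_tol_m: float = 5.0) -> list[str]:
    """Compare a new OTDR event table with the reference one.

    An event within match_tol_m of a reference event is the same event; alert if
    its loss grew by loss_delta_db or more. An unmatched event (for example a new
    bend or tap) raises an alert if its loss is at least new_event_db.
    All thresholds are hypothetical defaults.
    """
    alerts: list[str] = []
    for ev in current:
        match = next((r for r in reference
                      if abs(r.distance_m - ev.distance_m) <= match_tol_m), None)
        if match is None:
            if ev.loss_db >= new_event_db:
                alerts.append(f"new event at {ev.distance_m:.0f} m: {ev.loss_db:.2f} dB")
        elif ev.loss_db - match.loss_db >= loss_delta_db:
            alerts.append(f"degradation at {ev.distance_m:.0f} m: "
                          f"+{ev.loss_db - match.loss_db:.2f} dB")
    return alerts


reference = [Event(120, 0.2), Event(850, 0.3)]
current = [Event(121, 0.25), Event(510, 0.6), Event(849, 1.0)]
for alert in compare_to_reference(reference, current):
    print(alert)
# new event at 510 m: 0.60 dB
# degradation at 849 m: +0.70 dB
```

Matching events by distance within a tolerance absorbs the small position jitter between sweeps. A real system would also need to handle events that disappear, such as those beyond a fiber break.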
