Hyperscale

Testing Challenges, Resources, and Solutions

Hyperscale Ecosystems and VIAVI

VIAVI Solutions is an active participant in over thirty standards bodies and open-source initiatives, including the Telecom Infra Project (TIP). When standards don’t move quickly enough, we anticipate and develop equipment to test evolving infrastructure standards. We believe in open APIs, so hyperscale companies can continue to write their own automation code.

The complexity of hyperscale architecture makes testing essential during network construction, expansion, and monitoring phases. Decades of innovation, partnership, and collaboration with over 4,000 global customers and standards bodies like the FOA have equipped VIAVI to address the distinctive test and assurance challenges faced by hyperscalers. We guarantee performance of optical hardware over the lifecycle of the data center ecosystem, from lab to turn-up to monitoring.

What is Hyperscale?

Hyperscale refers to the hardware and software architecture used to create highly adaptable computing systems with a large number of servers networked together. The definition provided by IDC includes a minimum threshold of five thousand servers on a footprint of ten thousand square feet or more.

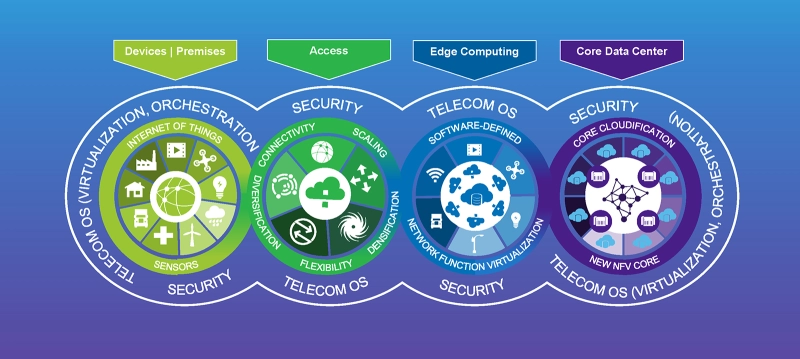

Horizontal scaling (scaling out) responds to demand quickly by deploying or activating more servers, while vertical scaling (scaling up) increases the power, speed, and bandwidth of existing hardware. The high capacity provided by hyperscale technology is well suited to cloud computing and big data applications. Software defined networking (SDN) is another essential ingredient, along with specialized load balancing to direct traffic between servers and clients.

Hyperscale Resources

- How does Hyperscale work?

Hyperscale solutions optimize hardware efficiency with massive server arrays and computing processes designed to promote flexibility and scalability. The load balancer continuously monitors the workload and capacity of each server so that new requests are routed appropriately. Artificial intelligence (AI) can be applied to hyperscale storage, load balancing, and optical fiber transmission processes to optimize performance and switch off idle or underutilized equipment.

- Hyperscale Benefits

The size associated with hyperscale architecture provides benefits including efficient cooling and power distribution, balanced workloads across servers, and built-in redundancy. These attributes also make it easier to store, locate, and back up large amounts of data. For client companies, leveraging the services of hyperscale providers can be more cost-effective than purchasing and maintaining their own servers, with less risk of downtime. This can significantly reduce time demands for in-house IT personnel.

- Hyperscale Challenges

The economies of scale that hyperscale technology provides can also lead to challenges. High traffic volumes and complex flows can make real-time data monitoring difficult. Visibility into external traffic can also be complicated by the speed and quantity of fiber and connections. Security concerns are heightened since the concentration of data increases the impact of any breach. Automation and machine learning (ML) are two of the tools being used to improve observability and security for hyperscale providers.
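The load-balancing behavior described under "How does Hyperscale work?" can be illustrated with a minimal sketch. It assumes a simple least-utilization policy; the `Server` class, the `route` and `idle_servers` helpers, and the capacity figures are hypothetical and are not taken from any specific product.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    capacity: int      # maximum concurrent requests (assumed figure)
    active: int = 0    # requests currently in flight

    @property
    def utilization(self) -> float:
        return self.active / self.capacity


def route(servers: list[Server]) -> Server:
    """Send one new request to the least-utilized server with headroom."""
    candidates = [s for s in servers if s.active < s.capacity]
    if not candidates:
        # No headroom anywhere: this is the signal to scale out.
        raise RuntimeError("no capacity left: add or activate servers")
    target = min(candidates, key=lambda s: s.utilization)
    target.active += 1
    return target


def idle_servers(servers: list[Server]) -> list[str]:
    """Names of servers with no active requests (candidates to power down)."""
    return [s.name for s in servers if s.active == 0]


pool = [Server("a", capacity=4), Server("b", capacity=8), Server("c", capacity=4)]
assigned = [route(pool).name for _ in range(6)]
print(assigned, idle_servers(pool))
```

Because routing is based on utilization rather than raw request counts, the larger server "b" absorbs proportionally more of the load. A production load balancer would also account for health checks, latency, and session affinity.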

Hyperscale Models

The ability to precisely match memory, storage, and computing power allocation to changing service requirements is a characteristic that sets hyperscale apart from traditional data center models. Virtual machine (VM) technology allows software applications to be rapidly moved from one physical location to another. High-density servers, parallel nodes to improve redundancy, and continuous monitoring are other prevalent features. Since no single architecture or server configuration is ideal, new models are being developed to address evolving operator, service provider, and customer needs.

- What is Hyperscale Cloud?

The internet and cloud computing have reshaped the way applications and data storage are accessed by users. By transferring these functions from local servers to remote data centers managed by large cloud service providers (CSPs), businesses can ramp up infrastructure on demand without impacting in-house operations. Hyperscale cloud providers add a supersized level of data center capacity and elasticity to traditional cloud offerings such as software as a service (SaaS), infrastructure as a service (IaaS), and data analytics.

- Hyperscale vs Cloud Computing

Although the terms are sometimes used interchangeably, cloud deployments do not always reach such massive proportions, and hyperscale data centers can support much more than cloud computing. Public clouds used to deliver resources to multiple customers over the internet (cloud as a service) often fall within the hyperscale definition, although private cloud deployments can be relatively small. When an enterprise data center built and operated by an organization to meet its own computing and storage needs incorporates high server volume and advanced orchestration capabilities, the result is known as enterprise hyperscale.

- Hyperscale Cloud Providers

Hyperscale cloud providers invest heavily in infrastructure and intellectual property (IP) to meet customer requirements. Interoperability becomes important as customers look for portability across standardized platforms. Synergy Research Group data shows that the three largest players, Amazon Web Services (AWS), Microsoft, and Google, account for over half of all installations. These three providers are also leading planning and development efforts for the hyperscale edge data centers needed to meet evolving 5G and IoT requirements.

- Hyperscale Colocation

Colocation allows a business to rent part of an existing data center rather than building a new one. Hyperscale colocation can create useful synergies when related industries, such as retail and wholesale vendors, are under the same roof. Overall costs for power, cooling, and communication are reduced for each tenant while uptime, security, and scalability are markedly improved. Hyperscale companies can also improve their efficiency and profitability by leasing excess capacity to tenants.

- Hyperscale Architecture

Although there is no set definition for hyperscale architecture, common characteristics include petabyte level (or greater) storage capacity, a discrete software layer performing complex load balancing and data transfer functions, built-in redundancy, and commodity server hardware. Unlike servers used by small private data centers, hyperscale servers typically use wider racks and are designed for easier reconfiguration. This combination of features effectively removes the intelligence from the hardware, instead relying on advanced software and automation to manage data storage and scale applications quickly.

- Hyperscale vs Hyperconverged

Unlike hyperscale architecture, which separates computing from storage and networking functions, hyperconverged architecture integrates these components within pre-packaged, modular solutions. With software-defined elements created in a virtual environment, the three components of a hyperconverged building block cannot be separated. Hyperconverged architecture makes it easier to scale up or down quickly through a single contact point. Additional benefits include bundled compression, encryption, and redundancy capabilities.

Hyperscale Connectivity

As hyperscale models become more distributed, edge computing and multi-cloud strategies are increasingly common. Connectivity must be assured for physical connections within the data center as well as the high-speed fiber links that seamlessly integrate hyperscale locations.

- Data center interconnects (DCIs) are used to link massive data centers to one another as well as intelligent edge computing centers around the world.

- DCI connection testing eases installation by quickly verifying throughput and accurately pinpointing sources of latency or other issues.

- PAM4 modulation and Forward Error Correction (FEC) add complexity to ultra-fast 400-800G Ethernet DCI connections.

Automated MPO connector inspection completed in seconds, simultaneous testing of multiple 400G or 800G ports, and virtual service activation and monitoring are some of the test capabilities required to keep pace with evolving hyperscale interconnect architecture.
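To make the PAM4 and FEC point above concrete, the sketch below estimates the per-lane symbol rate of a 400G Ethernet link. It assumes the 256b/257b transcoding and RS(544,514) FEC defined for 400GbE in IEEE 802.3; these parameters and the helper name `lane_symbol_rate_gbd` are assumptions added for illustration, not details from this page.

```python
from fractions import Fraction

# Assumed parameters (IEEE 802.3 400GbE): 256b/257b transcoding and
# RS(544,514) forward error correction both expand the line rate.
TRANSCODE = Fraction(257, 256)
FEC = Fraction(544, 514)
PAM4_BITS_PER_SYMBOL = 2  # PAM4 uses four amplitude levels, i.e. 2 bits/symbol


def lane_symbol_rate_gbd(mac_rate_gbps: int, lanes: int) -> Fraction:
    """Per-lane PAM4 symbol rate in GBd for a given MAC rate and lane count."""
    line_rate_gbps = mac_rate_gbps * TRANSCODE * FEC
    return line_rate_gbps / lanes / PAM4_BITS_PER_SYMBOL


overhead = TRANSCODE * FEC                    # total line-rate expansion
baud_8_lanes = lane_symbol_rate_gbd(400, 8)   # eight 50G-class lanes
baud_4_lanes = lane_symbol_rate_gbd(400, 4)   # four 100G-class lanes
print(float(overhead), float(baud_8_lanes), float(baud_4_lanes))
```

Under these assumptions the encoded line rate is 6.25% higher than the MAC rate. PAM4 halves the symbol rate relative to two-level signaling, but it also narrows the spacing between signal levels, which is why FEC and pre-FEC error-rate testing become part of link validation.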

Hyperscale Testing Practices

Many hyperscale testing practices for fiber connections, network performance, and service quality remain consistent with conventional data center testing, only on a significantly larger scale. Uptime reliability becomes more important even as the testing complexity grows. DCIs running close to full capacity should be tested and monitored consistently to verify throughput and find potential issues before a fault occurs. Automated monitoring solutions should be used to minimize resource demands.

The customization of data center hardware and software makes interoperability essential for premium test solutions. This includes test tools supporting open APIs to accommodate the hyperscale diversity. Common interfaces like PCIe and MPO that enable high density and capacity have grown in popularity and require solutions to efficiently test and manage them.

- Bit error rate testers like the MAP-2100 have been developed specifically for environments where few or no personnel are available to perform network tests.

- Network monitoring solutions intended for the hyperscale ecosystem can flexibly launch large-scale performance monitoring tests from multiple physical or virtual access points.

- Testing best practices defined for elements like MPO and ribbon fiber can be applied on a massive scale within deployments.
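As a rough illustration of what a bit error rate test measures, the sketch below computes a BER from error counts and estimates how long an error-free test must run to support a BER claim. It uses the common zero-error confidence rule n = -ln(1 - CL) / BER; the function names and the 100 Gb/s example rate are assumptions for illustration and do not describe any particular instrument.

```python
import math


def bit_error_rate(bit_errors: int, bits_tested: int) -> float:
    """Measured BER: errored bits divided by total bits received."""
    if bits_tested <= 0:
        raise ValueError("bits_tested must be positive")
    return bit_errors / bits_tested


def bits_for_confidence(target_ber: float, confidence: float = 0.95) -> float:
    """Error-free bits needed to claim BER < target_ber at the given confidence."""
    return -math.log(1.0 - confidence) / target_ber


def required_test_seconds(target_ber: float, line_rate_bps: float,
                          confidence: float = 0.95) -> float:
    """Minimum error-free test duration at a given line rate."""
    return bits_for_confidence(target_ber, confidence) / line_rate_bps


measured = bit_error_rate(bit_errors=3, bits_tested=10**12)
duration = required_test_seconds(target_ber=1e-12, line_rate_bps=100e9)
print(f"measured BER {measured:.1e}, error-free run needed: {duration:.1f} s")
```

The required duration shrinks as the line rate rises, which is one reason unattended, automated BER testing scales well across many high-speed ports.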

Hyperscale Solutions

New data center installations continue to add size, complexity, and density. Test solutions and products originally developed for other high-capacity fiber applications can also be used to verify and maintain hyperscale performance.

- Fiber Inspection: The high volume of fiber connections within and between data centers requires reliable and efficient fiber inspection tools. A single particle, defect, or contaminated end-face can lead to insertion loss and compromised network performance. The best fiber inspection tools for hyperscale applications include compact form factors, automated inspection routines, and multi-fiber connector compatibility.

- Fiber Monitoring: The increase in fiber optics within the hyperscale data center industry has made fiber monitoring a difficult yet essential task. Versatile testing tools for continuity, optical loss measurement, optical power measurement, and OTDR are a must. This applies throughout all phases of construction, activation, and troubleshooting activities. Automated fiber monitoring systems like the ONMSi Remote Fiber Test System (RFTS) can provide scalable, centralized fiber monitoring with immediate alerts.

- MPO: Multi-fiber push on (MPO) connectors were once used primarily for dense trunk cables. Today, hyperscale density constraints have led to rapid adoption of MPO for patch panel, server, and switch connections. Fiber testing and inspection can be accelerated through dedicated MPO test solutions with automated pass/fail results.

- High Speed Transport - 400G and 800G: Emerging technologies like the IoT and 5G with high bandwidth demands have made cutting-edge, high-speed Ethernet standards like 400G and 800G essential to hyperscale solutions. Scalable, automated test tools can perform advanced error rate and throughput testing to verify performance. Industry-standard Y.1564 and RFC 2544 service activation workflows also assess latency, jitter, and frame loss at high speeds.

- Virtual Test: Portable test instruments that once required a network technician on site can now be virtually operated through the Fusion test agent to reduce resource requirements. The software-based Fusion platform can be used to monitor networks and verify SLAs. Ethernet activation tasks such as RFC 6349 TCP throughput testing can also be virtually initiated and executed.

- 5G Networks: Distributed, disaggregated 5G networks bring more demand for hyperscale solutions and virtual testing of network functionality, applications, and security. The TeraVM solution is a valuable tool for validating both physical and virtual network functions and emulating millions of unique application flows to assess overall QoE. Learn more about our complete end-to-end RANtoCore™ testing and validation solutions.

- Observability: The concept of observability is linked to achieving deeper levels of network insight, which lead to higher levels of performance and reliability. The VIAVI Observer platform utilizes valuable network data sources to produce flexible, intuitive dashboards and actionable insights. This results in faster problem resolution, improved scalability, and optimized service delivery. Learn more about Performance Management & Security.
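The RFC 2544 throughput measurement mentioned under High Speed Transport looks for the highest offered load at which no frames are lost. A minimal sketch of that search follows, assuming a binary search over load and a hypothetical `run_trial` callback that returns frames sent and received; the `simulated_link` function stands in for a real tester and its 87.5% loss threshold is invented for the example.

```python
from typing import Callable

# A trial takes an offered load (% of line rate) and returns (sent, received).
Trial = Callable[[float], tuple[int, int]]


def find_throughput(run_trial: Trial, resolution: float = 0.1) -> float:
    """Binary-search the highest load (% of line rate) with zero frame loss."""
    low, high = 0.0, 100.0
    sent, received = run_trial(high)
    if received == sent:
        return high                # loss-free at full line rate
    while high - low > resolution:
        rate = (low + high) / 2
        sent, received = run_trial(rate)
        if received == sent:
            low = rate             # no loss: try a higher load
        else:
            high = rate            # loss seen: back off
    return low


def simulated_link(rate: float) -> tuple[int, int]:
    """Hypothetical device under test that drops frames above 87.5% load."""
    sent = 1_000_000
    lost = 0 if rate <= 87.5 else int(sent * (rate - 87.5) / 100)
    return sent, sent - lost


throughput = find_throughput(simulated_link)
print(f"zero-loss throughput: about {throughput:.1f}% of line rate")
```

In practice each trial runs for a fixed duration and is repeated across a set of frame sizes, with latency and frame loss reported alongside the throughput figure.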

What We Offer

The diverse VIAVI test product offerings cover all aspects and phases of hyperscale construction, activation, and maintenance. Throughout the transition towards larger and denser data centers and edge computing, fiber connector inspection has remained an essential part of the overall test strategy.

- Certification: The rapid expansion of MPO connector utilization makes the INX 760 Probe Microscope an ideal solution for automated multi-fiber end face certification. Optical loss test sets (OLTS) designed specifically for the MPO interface, such as the SmartClass Fiber MPOLx, also make Tier 1 fiber certification easier and more reliable.

- High-Speed Test: Optical Transport Network (OTN) testing and Ethernet service activation must be performed quickly and accurately to support the high-speed connectivity of hyperscale:

  - The T-BERD 5800 100G (or MTS-5800 100G outside of the North American market) is an industry leading, ruggedized, and compact dual-port 100G test instrument for fiber testing, service activation, and troubleshooting. This versatile tool can be used for metro/core applications as well as DCI testing.

  - The T-BERD 5800 supports consistency of operations with repeatable methods and procedures.

  - The versatile, cloud-enabled OneAdvisor-1000 takes high speed testing to the next level with full rate and protocol coverage, PAM4 native connectivity, and service activation testing for 400G as well as legacy technologies.

- Multi-fiber, All-in-One: Hyperscale architecture creates an ideal setting for automated OTDR testing through MPO connections. The multi-fiber MPO switch module is an all-in-one solution for MPO-dominated, high-density fiber environments. When used in conjunction with the T-BERD test platform, fibers can be characterized through OTDR without the need for time-consuming fan-out/break-out cables. Automated test workflows for certification can be performed for up to 12 fibers simultaneously.

- Automated Testing: Test process automation (TPA) reduces hyperscale construction times, manual test processes, and training hours. Automation enables efficient throughput and BER testing between hyperscale data centers as well as end-to-end verification of complex 5G network slices.

  - The SmartClass Fiber MPOLx optical loss test set brings TPA to Tier 1 fiber certification with native MPO connectivity, automated workflows, and full visibility of both ends of the link. Comprehensive 12-fiber test results are delivered in under 6 seconds.

  - The handheld Optimeter optical fiber meter makes the “no-test” option irrelevant by completing fully automated, one-touch fiber link certification in less than a minute.

- Standalone, Remote Fiber Monitoring: Advanced, remote test solutions are ideal for scalable, unmanned hyperscale cloud settings. With fiber monitoring, detected events including degradation, fiber-tapping, or intrusions are quickly converted to alerts, safeguarding SLA contracts and DCI uptime. The ONMSi Remote Fiber Test System (RFTS) performs ongoing OTDR “sweeps” to accurately detect and predict fiber degradation throughout the network. Hyperscale data center OpEx, MTTR, and network downtime are dramatically reduced.

- Observability and Validation: The same machine learning (ML), artificial intelligence (AI), and network function virtualization (NFV) breakthroughs that enable hyperscale cloud and edge computing for 5G are also driving advanced hyperscale test solutions.

  - The Observer platform goes beyond traditional monitoring by intelligently converting enriched flow data and traffic conversation details into real-time health assessments and valuable end-user experience scoring.

  - The flexible TeraVM software appliance is ideal for validating virtualized network functions. Key network segments including access, mobile network backhaul, and security can be fully validated in the lab, data center, or cloud.
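Tier 1 fiber certification, referenced in the list above, compares the measured optical loss of each fiber against a calculated limit. The sketch below shows one way such a pass/fail check could be expressed for a 12-fiber MPO link. The per-kilometer, per-connector, and per-splice allowances are illustrative assumptions only, and the `LossLimits`, `loss_budget_db`, and `certify` names are hypothetical; actual limits come from the applicable cabling standard or project specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossLimits:
    # Illustrative allowances (assumptions, not values from this page).
    fiber_db_per_km: float = 3.0
    connector_pair_db: float = 0.75
    splice_db: float = 0.3


def loss_budget_db(length_m: float, connector_pairs: int, splices: int = 0,
                   limits: LossLimits = LossLimits()) -> float:
    """Maximum allowed link loss: fiber attenuation plus connection losses."""
    return (length_m / 1000 * limits.fiber_db_per_km
            + connector_pairs * limits.connector_pair_db
            + splices * limits.splice_db)


def certify(measured_db: list[float], budget_db: float) -> dict[int, str]:
    """Pass/fail verdict per fiber position (1-based) against the budget."""
    return {i: ("PASS" if loss <= budget_db else "FAIL")
            for i, loss in enumerate(measured_db, start=1)}


budget = loss_budget_db(length_m=100, connector_pairs=2)
results = certify([0.9] * 11 + [2.1], budget)   # fiber 12 exceeds the budget
failed = [pos for pos, verdict in results.items() if verdict == "FAIL"]
print(f"budget {budget:.2f} dB, failed fibers: {failed}")
```

Automating this comparison for every fiber in a connector is what allows a multi-fiber test set to return a single pass/fail result per link.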

Let Us Help

We’re here to help you get ahead.